Webinar on What AI Means for Teaching

On July 26, 2023, the MLA-CCCC Joint Task Force on Writing and AI held its first webinar, focused on what AI means for teaching. The webinar shared information about the task force's work to date, provided an overview of the group's first working paper, discussed future directions for the task force, and explained how others can participate by contributing input. Co-chair Elizabeth Losh served as moderator, and four other task force members gave brief presentations and answered questions. Over 1,400 people attended, and ASL interpretation was provided. Read more about the webinar below or watch the recording here.

Co-chair Holly Hassel began by discussing the process of forming the task force, including the initial problem statement that encouraged both organizations to act collaboratively and the charge that shaped the group's purposes. She noted that the group began producing materials right away, including a quick start guide. She also referred the audience to the task force's recent submission to the Office of Science and Technology Policy in response to its request for information about national priorities for artificial intelligence.

Antonio Byrd followed with a summary of some of the risks to students, faculty, and academic programs that the working paper had identified. Byrd cautioned about the alienation and mistrust that over-emphasizing surveillance and detection could generate, about the injustices that could be perpetuated when LLMs (large language models like ChatGPT) normatively reproduce standardized English, and about how students might lose out if they come to see writing and language study as less valuable. On the faculty side, he warned that teachers might have to make major changes to their pedagogy without adequate time, compensation for their labor, or up-to-date training and support. Like many of the panelists who followed, he emphasized the importance of Critical AI Literacy about the nature, capacities, and risks of AI tools. Byrd also described the dangers of inconsistent policies, of policy-making that excludes input from the faculty most affected, and of a general undermining of academic research and review practices.

Leo Flores identified some significant benefits that were also worth considering. In addition to offering powerful tools and a massive corpus of linguistic material, LLMs could lower technical and access barriers to translation, coding, digital humanities (DH) methods, and writing support. According to Flores, these technologies could also aid language instruction by quickly providing multiple models and by giving students a way to ask questions and receive answers outside of class time. However, Flores observed that refining translations created by an LLM will still require deeper knowledge of language choices, especially with literary works. In literary study, Flores asserted, LLMs could offer benefits ranging from basic interpretations that serve as low-stakes launching points for discussion to more advanced production of electronic literature or programming code.

Anna Mills emphasized the aspects of the working paper that provide policy guidance, in particular centering the inherent value of writing as a mode of learning and the importance of process-focused instruction. Building upon the findings of the working paper, she acknowledged that institutional policies will necessarily be complex, given that they involve ethical nuance, specialized labor, and issues of copyright, data use, and privacy. She observed that the working paper's recommendations also entail promoting an ethic of transparency around any use of AI text, which builds on existing teaching practices around source citation. She also discussed why the working paper discourages investing too much faith in flawed AI detectors and too much energy in punishing students rather than supporting them. She closed by emphasizing constructive and collaborative approaches, the strengths of bringing multiple fields into the discussion, and the ways in which even seemingly new "prompt engineering" draws on existing forms of rhetorical expertise.

Liz Losh discussed the near future for the task force. She spoke about how generative AI will very likely be integrated into many common composing platforms soon, and about how institutions have been surprisingly slow to respond, despite having pivoted rapidly only a few years ago when the COVID-19 pandemic necessitated online instruction. Like others on the panel, she emphasized that these technologies have a range of uses, including invention, revision, and reflection.

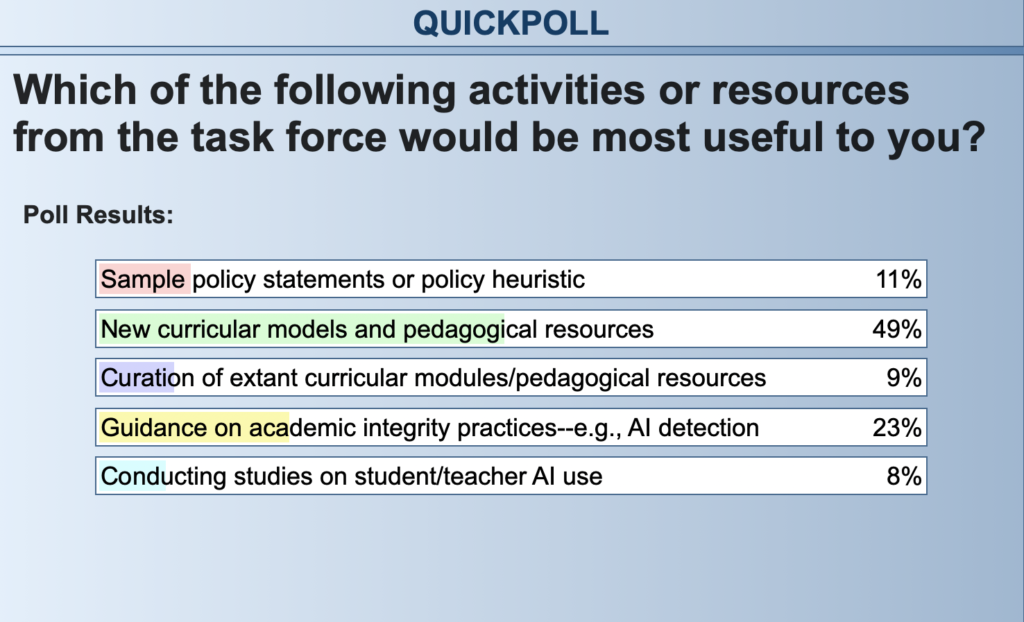

Before a lively question-and-answer session, participants were polled and the results were shared.